Updated Safety Report Released Amid Autopilot Lawsuits

In the midst of two prominent court cases related to its “Autopilot” driver-assist system, Tesla has released an updated safety report doubling down on claims that the system improves road safety by a significant margin.

Tesla has been voluntarily releasing quarterly data on crashes involving its vehicles since 2018, and did so again this week alongside its Q2 financial disclosures. The automaker said cars using Autopilot traveled over six million miles between crashes during the second quarter of this year, while Tesla cars not using the system traveled just under one million miles between crashes. Tesla claims that even this lower figure is slightly better than the national average.

In the disclosure, Tesla noted that the number of crashes can vary from quarter to quarter and can be affected by seasonal weather conditions. But the self-reported Q2 data is in line with what Tesla has reported for recent quarters: the Autopilot-enabled fleet generally travels much farther between crashes than both Tesla vehicles not using Autopilot and the average vehicle on United States roads.

But this single statistic, which again comes from data self-reported by Tesla rather than independent analysis, doesn’t tell the entire story. Two lawsuits allege that Autopilot could pose a safety risk in its own right.

First Federal Autopilot Case Heads To Trial


This week, a federal court in Miami started hearing a wrongful death case involving Autopilot. In April 2019, a Tesla driven by George McGee sped through a stop sign in the Florida Keys and struck Naibel Benavides and Dillon Angulo, who were standing near the road stargazing. Benavides was killed, while Angulo was left with a brain injury.

Tesla has faced Autopilot-related lawsuits before. Among the most high-profile were the suit over the 2018 death of Walter Huang, who was killed when his Tesla Model X steered itself into a concrete highway divider, and the suit over the 2019 death of Jeremy Banner, whose Tesla collided with a tractor-trailer. Those cases were settled out of court; this is the first time a federal court has heard a case alleging that Tesla Autopilot caused a fatal crash.

As reported by Ars Technica, one of the experts called on to testify in the Miami trial took issue with Tesla’s crash-data reporting methodology. Mendel Singer, a statistician at Case Western Reserve University, said that he was “not aware of any published study, any reports that are done independently… where [Tesla] actually had raw data and could validate it.”

Singer also noted that Tesla is not making an apples-to-apples comparison with competitors' crash rates. Non-Tesla crashes are counted from police reports, which don't record whether any driver-assist technology was (or wasn't) in use and don't draw on data pulled from the vehicles themselves.

California Gets Serious

On the opposite side of the country, the California DMV is asking a court to suspend Tesla's sales license for 30 days and is seeking financial penalties over what the DMV alleges are misleading statements about Autopilot and Tesla's more sophisticated "Full Self-Driving" system, which, to be clear, does not enable autonomous driving.

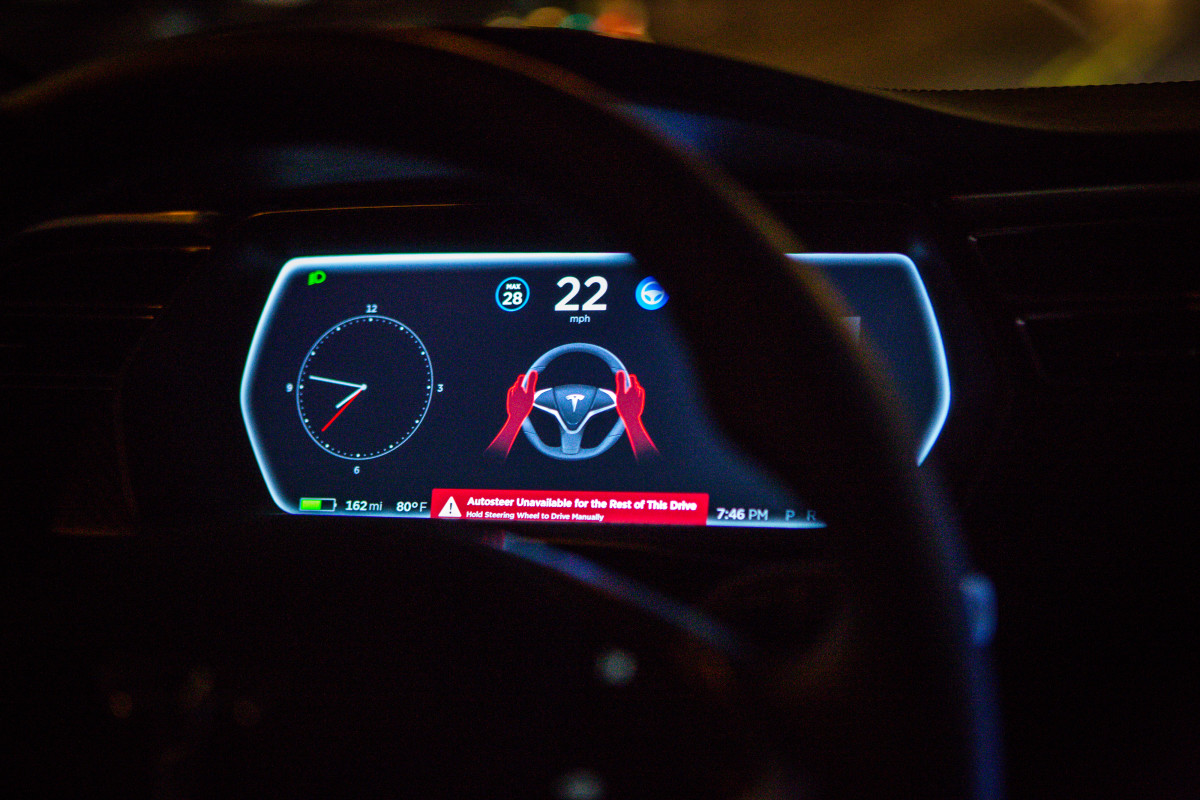

The California DMV is reportedly concerned not only with potentially misleading names like Autopilot and Full Self-Driving, but also with statements by Tesla implying capabilities these systems don't have, such as one claiming that they are "designed to be able to conduct short and long-distance trips with no action required by the person in the driver's seat."

Tesla has reportedly argued that in-car warnings telling drivers to stay alert at all times are sufficient to prevent any confusion about the systems' capabilities. But while drivers may be reminded to pay attention once they get in the car, those reminders come only after drivers have been encouraged to place their full trust in these systems through advertising and unscientific safety reports like the one Tesla just released. The string of fatal Autopilot-related crashes shows how irresponsible this communications strategy can be.